For our approach to deliver learning at the pace we required, we needed to define Minimum Viable Changes (MVCs) that we could complete in weeks or even days. We did this by breaking each of our strategies into one or more transformation adoption "campaigns". Campaigns were differentiated by the underlying adoption approach, the strategy they supported, or the content being adopted.

MVCs were then designed to validate the assumptions contained within a particular campaign. We needed to quickly determine whether the approach behind a campaign could change people's behavior and help them acquire new, useful skills at the rate required for success.

Each MVC was labeled with a descriptive name and the one specific assumption it was designed to validate. Each MVC also contained an explicit hypothesis, along with details on how to measure whether that hypothesis held.

Every MVC followed a similar measurement approach. Each MVC targeted a specific set of skills taken from the Transformation Participation Engine mentioned in the previous section, and its value was determined by the increase in the number of individuals acquiring those skills. Assumptions could then be validated by measuring actual changes in behavior and determining whether the adoption approach underlying the campaign was sound.
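The measurement idea above can be made concrete with a small sketch. It assumes, purely for illustration, that each person's demonstrated skills are recorded as a set, that an MVC's value is the net increase in people holding at least one targeted skill, and that a hypothesis carries a numeric success threshold. The `MVC` fields, the helper functions, and the sample data are all hypothetical, not the team's actual tooling.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MVC:
    """Hypothetical record of one Minimum Viable Change (field names are illustrative)."""
    name: str                      # descriptive label
    assumption: str                # the single campaign assumption under test
    hypothesis: str                # explicit statement the MVC should confirm or refute
    target_skills: frozenset[str]  # skills taken from the Transformation Participation Engine
    expected_new_learners: int     # assumed success threshold for the hypothesis


def skilled_individuals(skills_by_person: dict[str, set[str]], target: frozenset[str]) -> set[str]:
    """Return the people who have demonstrated at least one targeted skill."""
    return {person for person, skills in skills_by_person.items() if skills & target}


def mvc_value(mvc: MVC, before: dict[str, set[str]], after: dict[str, set[str]]) -> int:
    """Value of an MVC: the net increase in individuals holding a targeted skill."""
    now_skilled = skilled_individuals(after, mvc.target_skills)
    baseline = skilled_individuals(before, mvc.target_skills)
    return len(now_skilled) - len(baseline)


def hypothesis_supported(mvc: MVC, before: dict[str, set[str]], after: dict[str, set[str]]) -> bool:
    """Treat the assumption as validated if observed growth meets the threshold."""
    return mvc_value(mvc, before, after) >= mvc.expected_new_learners


# Usage with made-up skill observations for four people.
example = MVC(
    name="Example MVC",
    assumption="Teams will adopt a visual board after one workshop",
    hypothesis="At least 3 more people will use a targeted skill",
    target_skills=frozenset({"visualize_work", "apply_wip_limit"}),
    expected_new_learners=3,
)
before = {"ana": set(), "ben": {"visualize_work"}, "cy": set(), "dee": set()}
after = {
    "ana": {"visualize_work"},
    "ben": {"visualize_work"},
    "cy": {"apply_wip_limit"},
    "dee": {"visualize_work"},
}
print(mvc_value(example, before, after))             # 3
print(hypothesis_supported(example, before, after))  # True
```

Measuring people rather than activities keeps the value tied to behavior change, which is what the campaigns were trying to validate.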

An example of this approach is the Kanban strategy, which was broken into a number of campaigns. The Visualize As Is Work campaign was dedicated to visualizing current-state processes and gradually implementing WIP limits, policies and other components. It did not include any other Agile practices. An example of an MVC implemented for the Visualize As Is Work campaign was the setup of Functional Department Kanban Boards.

By contrast, the Kanban/Agile Pilots campaign took a much more aggressive approach, mixing Kanban with Agile practices such as story-based development, cross-functional teams and planning poker. An example of an MVC implemented for this campaign was dedicated coaching and training in story mapping.

Other campaigns included a Kanban self-starter program allowing everyday staff to run their own Kanban initiatives, a hiring campaign to recruit dedicated Lean/Agile coaches, and a gamification framework that would render individuals' progress in skills and behavior as a role-playing-game-style character sheet and leaderboard.

Using Kanban to track validated learning while supporting a Kanban transformation

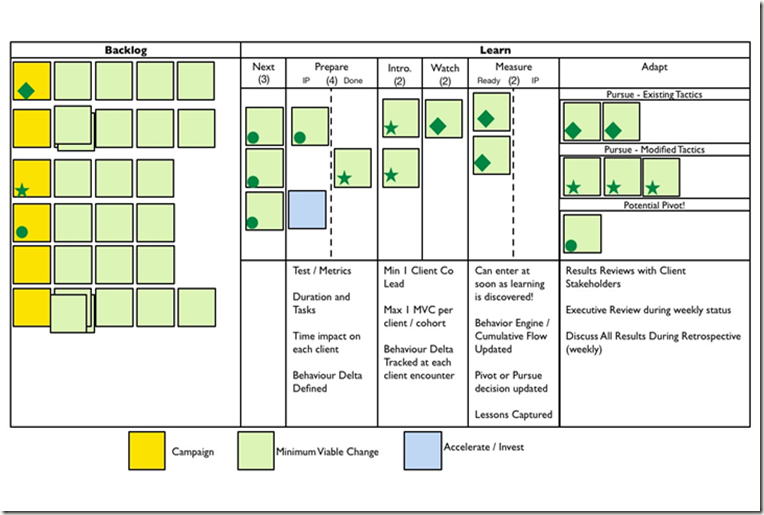

Naturally, the change team used a Kanban system to track the progress of our organizational transformation. During the first three months of this engagement, our Lean Startup Kanban system changed five times. The end result was much simpler than previous incarnations and has provided excellent support for a validated-learning approach to change.

The backlog consisted of numerous campaigns, each associated with a set of MVCs designed to validate that campaign's assumptions. MVC priority was represented by position, with MVCs ordered from left to right on the backlog.

MVCs were sized so that their lead time would be between one and three weeks. During the preparation phase, the metrics used to measure a specific hypothesis were defined, and the exact impact on, and commitment required from, the targeted set of clients were specified and communicated.
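The one-to-three-week sizing rule is simple arithmetic on dates. The sketch below assumes that lead time is counted in calendar days from when an MVC leaves the backlog to when it is measured; the text does not say exactly where the clock starts and stops, so both endpoints and the function names are hypothetical.

```python
from datetime import date

# Sizing window from the text: lead time between 1 and 3 weeks.
MIN_LEAD_TIME_DAYS = 7
MAX_LEAD_TIME_DAYS = 21


def lead_time_days(started: date, finished: date) -> int:
    """Calendar days from leaving the backlog (assumed start) to being measured (assumed end)."""
    if finished < started:
        raise ValueError("finished must not precede started")
    return (finished - started).days


def within_sizing_target(started: date, finished: date) -> bool:
    """True if an MVC's lead time fell inside the 1-3 week window."""
    return MIN_LEAD_TIME_DAYS <= lead_time_days(started, finished) <= MAX_LEAD_TIME_DAYS


print(lead_time_days(date(2024, 3, 4), date(2024, 3, 18)))        # 14
print(within_sizing_target(date(2024, 3, 4), date(2024, 3, 18)))  # True
print(within_sizing_target(date(2024, 3, 1), date(2024, 3, 29)))  # False (28 days)
```

Tracking actual lead times against the window is one way to notice when an MVC was scoped too large to deliver fast feedback.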

MVCs were then introduced to a subset of the organization known as a cohort. The introduction state involved initial coaching and training, hands-on workshop facilitation and other activities. Once the client was deemed able to operate somewhat independently with the newly introduced skills, we moved the MVC to the watch state.

Watching consisted of observing our customers and measuring specific behaviors as defined by the Transformation Participation Engine. Once our customers had been observed for a suitable period, we measured the MVC and determined whether the outcomes matched our hypothesis.

At first it was difficult to determine when to move an MVC from watch to measure, but we soon settled on a simple rule: an MVC could be moved as soon as anyone on our team felt that a campaign required a change in tactics. This triggered immediate action to measure the in-flight MVC and to introduce a new MVC to validate the modified approach. These observations often preceded our measurements: because we were measuring people's behavior, that behavior had to be observed manually. As a result, observation and measurement operated in tandem.
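The flow described above (backlog, preparation, introduction, watch, measure, then pivot or pursue) behaves like a small state machine, and the watch-to-measure rule is one of its transitions. This is a minimal sketch assuming lane names inferred from the prose; the real board may have used different columns. `Stage`, `Card` and `flag_tactic_change` are hypothetical names, and placing the follow-up MVC in the backlog is an assumption.

```python
from enum import Enum


class Stage(Enum):
    BACKLOG = "backlog"
    PREPARE = "prepare"
    INTRODUCE = "introduce"
    WATCH = "watch"
    MEASURE = "measure"
    PIVOT = "pivot"
    PURSUE = "pursue"


# Allowed moves between lanes (inferred from the prose; pivot and pursue are terminal here).
NEXT_STAGES = {
    Stage.BACKLOG: {Stage.PREPARE},
    Stage.PREPARE: {Stage.INTRODUCE},
    Stage.INTRODUCE: {Stage.WATCH},
    Stage.WATCH: {Stage.MEASURE},
    Stage.MEASURE: {Stage.PIVOT, Stage.PURSUE},
}


class Card:
    """One MVC on the change team's board; every card starts in the backlog."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.stage = Stage.BACKLOG

    def move(self, target: Stage) -> None:
        if target not in NEXT_STAGES.get(self.stage, set()):
            raise ValueError(f"cannot move {self.name!r} from {self.stage.value} to {target.value}")
        self.stage = target


def flag_tactic_change(board: list[Card], in_flight: Card, follow_up_name: str) -> Card:
    """Watch-to-measure rule: once anyone feels the campaign needs new tactics,
    measure the in-flight MVC now and add a follow-up MVC to the backlog."""
    in_flight.move(Stage.MEASURE)  # raises unless the card is currently in watch
    follow_up = Card(follow_up_name)
    board.append(follow_up)
    return follow_up


board = [Card("MVC 1")]
mvc = board[0]
for stage in (Stage.PREPARE, Stage.INTRODUCE, Stage.WATCH):
    mvc.move(stage)
new = flag_tactic_change(board, mvc, "MVC 2 (modified approach)")
print(mvc.stage.value, new.stage.value, len(board))  # measure backlog 2
```

Encoding the rule as a transition makes the trigger explicit: a team member's call to change tactics, rather than a fixed observation period, is what pulls the card into measure.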

Once a week we held a team retrospective, at which we reviewed all measured MVCs, discussed the outcomes, and moved each MVC to the appropriate pivot or pursue lane based on that discussion. The decision to pivot or pursue was also typically made at these retrospectives, after we had reviewed a batch of MVCs.
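A weekly retrospective over a batch of measured MVCs can be sketched as sorting cards into lanes plus a per-campaign summary. In the process described above, the lane and the pivot-or-pursue call came out of team discussion; here `hypothesis_held` simply records that agreed outcome, and `campaign_tally` only summarizes the batch to inform the discussion rather than deciding anything. All names and the sample batch are hypothetical placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeasuredMVC:
    name: str
    campaign: str
    hypothesis_held: bool  # outcome the team agreed on during discussion (hypothetical field)


def weekly_retrospective(measured: list[MeasuredMVC]) -> dict[str, list[str]]:
    """Sort every measured MVC into the pursue or pivot lane."""
    lanes: dict[str, list[str]] = {"pursue": [], "pivot": []}
    for mvc in measured:
        lanes["pursue" if mvc.hypothesis_held else "pivot"].append(mvc.name)
    return lanes


def campaign_tally(measured: list[MeasuredMVC]) -> dict[str, tuple[int, int]]:
    """Per campaign: (MVCs whose hypothesis held, MVCs whose hypothesis did not)."""
    counts: dict[str, list[int]] = {}
    for mvc in measured:
        held_missed = counts.setdefault(mvc.campaign, [0, 0])
        held_missed[0 if mvc.hypothesis_held else 1] += 1
    return {campaign: (held, missed) for campaign, (held, missed) in counts.items()}


# Placeholder batch; names and outcomes are invented for illustration.
batch = [
    MeasuredMVC("MVC 1", "Campaign A", True),
    MeasuredMVC("MVC 2", "Campaign A", False),
    MeasuredMVC("MVC 3", "Campaign B", True),
]
print(weekly_retrospective(batch))  # {'pursue': ['MVC 1', 'MVC 3'], 'pivot': ['MVC 2']}
print(campaign_tally(batch))        # {'Campaign A': (1, 1), 'Campaign B': (1, 0)}
```

Reviewing MVCs in batches, rather than one at a time, gives each campaign several data points before the team decides whether to change course.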