In my

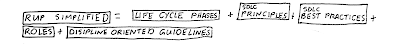

Previous postI mentioned that the rational unified process is slightly modified can offer good value to large-scale projects, in this submission I elaborate on some of the components of the process.

Product Lifecycle Phases and Skill-based Disciplines

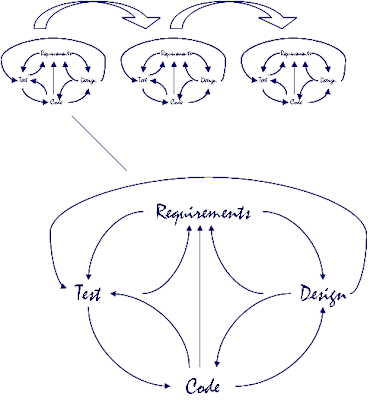

One of the biggest differentiators of the Rational Unified Process is its use of a two-dimensional grid to categorize work: each piece of work belongs to one or more phases and also to a specific discipline, or skill set.

The problem with many structured development processes is that they categorize work along one dimension only, and that dimension is usually based on skill sets. In effect, this means that work is organized around completing requirements, then completing design, then completing development, and so on. Given that the whole waterfall approach to methodology was first described as an anti-pattern almost 30 years ago, it's surprising how often I see software development methodologies popping up that subscribe to it, especially in the management consulting world. The RUP at least has the good sense to realize that if you're going to break things up by phases, you should create phases based on the natural lifecycle of a product.

While the RUP does categorize work into disciplines (requirements, design, etc.), it explicitly states that work from different disciplines can be conducted in parallel and that there is plenty of opportunity for the work to overlap. Furthermore, the RUP provides advice on how to break up phases into multiple iterations.
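To make the two-dimensional idea concrete, here is a minimal sketch of the grid as a data structure. It is my own illustration, not anything the RUP prescribes: the discipline list is the simplified one used in this post (not the official RUP discipline names), and `WorkItem`, `build_grid`, `disciplines_active_in`, and every work item name are hypothetical.

```python
from dataclasses import dataclass

# The four RUP phases, plus the simplified discipline list used in this post
# (an assumption: the official RUP discipline names differ).
PHASES = ("Inception", "Elaboration", "Construction", "Transition")
DISCIPLINES = ("Requirements", "Design", "Development", "Test", "Deployment")


@dataclass(frozen=True)
class WorkItem:
    """A hypothetical unit of work: one discipline, one or more phases."""
    name: str
    discipline: str
    phases: tuple


def build_grid(items):
    """Place each work item into every (phase, discipline) cell it touches."""
    grid = {(p, d): [] for p in PHASES for d in DISCIPLINES}
    for item in items:
        if item.discipline not in DISCIPLINES:
            raise ValueError(f"unknown discipline: {item.discipline}")
        for phase in item.phases:
            if phase not in PHASES:
                raise ValueError(f"unknown phase: {phase}")
            grid[(phase, item.discipline)].append(item.name)
    return grid


def disciplines_active_in(grid, phase):
    """List the disciplines that have at least one work item in a phase."""
    return [d for d in DISCIPLINES if grid[(phase, d)]]


# Illustrative work items only; none of these come from a real project.
EXAMPLE_ITEMS = [
    WorkItem("Business case", "Requirements", ("Inception",)),
    WorkItem("Architecture prototype", "Design", ("Elaboration",)),
    WorkItem("Performance spike", "Development", ("Elaboration",)),
    WorkItem("Revisit requirements", "Requirements", ("Elaboration", "Construction")),
    WorkItem("Feature build-out", "Development", ("Construction",)),
    WorkItem("Regression testing", "Test", ("Elaboration", "Construction")),
]

grid = build_grid(EXAMPLE_ITEMS)
print(disciplines_active_in(grid, "Elaboration"))
print(disciplines_active_in(grid, "Construction"))
```

The point of the sketch is that a single phase holds work from several disciplines at once, which is exactly what a one-dimensional, skill-based breakdown cannot express.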

RUP Phases

A brief description of each of the RUP phases follows:

Inception

At the beginning of a project, there has to be a vision, a good idea that will benefit the business, and there has to be some method of generating the money. Agile doesn't talk about any of this; when you start an agile project, you're already in requirements, design, and development. Somebody has to talk about putting together the business case and looking at the solution from an enterprise technology perspective (should the solution be Java or .NET, or how about a package like PeopleSoft?). Stakeholders need to be lined up, and a high-level estimate of what the overall solution is going to cost needs to be put together. Someone also has to think about organizational change and training. Many agile projects are doomed to failure (IMHO, I don't have a stat for this or anything) if they don't spend at least a couple of weeks to a couple of months (depending on project size) working on inception. Think of inception as an iteration zero on steroids, where the work is not only done by developers, although they do need to be heavily involved on the technical side.

Elaboration

Once the team has a general idea of what they are doing and why they're doing it, the RUP recommends starting the SDLC process (i.e. requirements, design, development, test, deploy) in multiple iterations that focus specifically on technical uncertainties, scary requirements, and generally anything that makes the developers "stay up and shiver at night". Again, elaboration can be looked at as the enterprise version of a combination of multiple iteration zeros, but supplemented with a comprehensive, planned set of spikes. One of the fundamental pillars of RUP is that any large development project should contain a phase where a subset of a large development team can get together to experiment, prototype, and mitigate technical risk before a large-scale team is applied to developing the entire solution. One interesting thing to note is that many companies adopting RUP confuse elaboration with design. In point of fact, elaboration requires one or more complete iterations of requirements, design, develop, test, and deploy to be considered complete. The difference between elaboration and construction is that elaboration is focused on mitigating technical risk.

Construction

Once the majority of the technical risk regarding a new platform, adopting some legacy code, or understanding some complex requirements has been mitigated through a number of completed development iterations, management is supposedly able to magically deem that all architecture risk has been eliminated, and that it's time to start a bricklaying, brainless, assembly-line approach to completing the solution. Now that all risk has supernaturally disappeared, it's time to expand the team and optimize the delivery approach so that it is an effective, efficient, manufacturing-style process. (Excuse me while I laugh hysterically.) As naïve as this view of software development is, what is even worse is that many organizations confuse construction with the phase where all software development is conducted. According to RUP, construction still requires revisiting requirements, revisiting design, and of course developing and testing. The emphasis is simply that more development than requirements or design work should take place.

Transition

At some point in time, the solution needs to be handed over to the client. Training needs to take place, consultants need to be replaced with counterparts within the organization, and the solution needs to be entrenched within the organization. This is all completely reasonable, and is something agile doesn't really talk about, and in my opinion probably shouldn't. I'm not sure I see the value in having any SDLC try to provide advice on how to fundamentally deliver a software product to an organization. It's not that I don't find the transition phase to be incredibly important; I just think that the RUP provides extremely superficial advice and waters down its own strong points by trying to do too much. I personally work for a consulting company that has an extremely strong organizational change department, and trust me, software process geeks really have no idea how to approach this one. Again, the biggest mistake organizations make when adopting RUP is to confuse transition with testing and deployment; the two have nothing to do with each other. If anybody out there is really interested in how to tackle transition, I recommend reading The Heart of Change for a start.

Compared to other methodologies RUP phases make sense, but...

Let's be honest, has anybody out there actually done any development work and said "hey, we are done with the elaboration phase, it's time to start construction..."? Well, I have actually been on projects where we had an "elaboration team" which was responsible for putting together the architecture framework, setting up the technical foundation, and making sure that the platform was solid from a performance point of view and could handle the various "complex" requirements. We then had a "construction team", scheduled to start several months after the elaboration team, which was supposed to use the "common design components", patterns, and other pieces that the elaboration team had put together.

While this sounds great on paper, the reality is that the majority of elaboration work really started once the construction team had landed. It was only when they started using the "common components" to implement the "non-complex requirements" that the elaboration team really figured out how the common components should operate. In short, software development cannot be neatly broken out into elaboration and construction phases. What I see happening is that iterations tend to start out with what appear to be largely "elaboration-style" activities. These early iterations tend to have more of the experimentation, prototyping, and spiking necessary to mitigate technical risk and figure out the intricacies of whatever new technologies are being used on the project. Subsequent iterations tend to have less and less elaboration-style work and more and more construction-style work. That being said, every once in a while a drastically new requirement comes into play, or the development team figures out a new approach that can drastically improve the overall solution but requires a dramatic rethinking of the way things are being done. My advice: replace the RUP elaboration and construction phases with a single development phase, and plan to have a decreasing but fluctuating amount of elaboration-style activity in each development iteration.

In my next post on this topic, I will complete this article by giving an overview of RUP principles and best practices and how to modify them to make them more agile.