A couple of weeks back I had the opportunity to interview Scott Ambler. Scott does not really need an introduction, but for those who don’t know him, Scott has been a tremendous help to the agile community for years. He’s the author of the agile modeling method, one of the authors of the enterprise unified process, and has played a key role in helping IBM and IBM clients become more agile. This is just a brief overview of some of Scott’s accomplishments.

The interview was comprehensive, and we were able to discuss a number of issues that are important to both the agile community and the developer community at large. I recommend taking a look. Scott has some great thoughts on the role that agile is playing, its limitations, and how to scale agile beyond the core use cases that many agile practitioners discuss. In this interview he shares his experiences applying agile and agile governance both internally at IBM and with his customers.

What’s keeping you busy these days?

I’m spending most of my time helping customers adopt agile and lean, particularly at scale, and use agile to address more complex, nonstandard problems. I’ve also just been working on a two-day disciplined agile delivery workshop, which will be offered to our clients starting in February. The focus of the workshop will be on how to do agile delivery end to end, targeted at intermediate development teams, i.e. developers and their immediate line managers.

What kind of practices will be covered in the training? Will the focus be on technical or management practices?

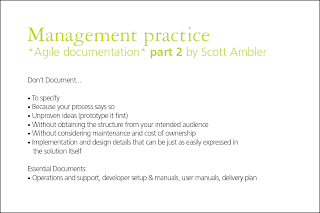

The training will be comprehensive, offering a combination of leadership-style material, modeling, how to do agile documentation, test-driven development, and also how to incorporate independent testing into agile. The goal is to explain how to do agile delivery end to end and cover the full spectrum: not just the cool "sexy" stuff that everybody likes to talk about, but the complete set of artifacts necessary to make agile delivery successful in the real world.

Let’s circle back to that in a second, but first of all, tell me about a typical day in the life of Scott Ambler. How do you go about spreading the word and evangelizing agile, both within IBM and to clients?

I sort of wish I had a typical day, which I don’t. A lot of my focus is on helping customers. I might spend an hour or two a day on conference calls with customers. I get to work out of my house for the most part; a lot of IBMers work from home. I am also frequently on site with customers, helping them work through whatever their complicated problem of the day is. I occasionally sit in on sales calls as a technical expert and have also been playing a role in the adoption of agile within IBM itself. In terms of adopting agile within IBM, I give advice to specific internal IBM product teams on how to become more agile.

What are some of the things you do specifically to help IBM adopt agile development internally? How much of this is actually working with IBM teams developing products in an agile manner, as opposed to helping consultants and professional services be more agile in the way they help clients build applications?

I would say it’s approximately two thirds customer facing and one third working directly with internal IBM teams.

Taking into consideration all your duties at IBM, both internally and with customers, what are some of the big issues that keep you up at night? What would you cite as your biggest challenges?

It’s usually around helping people figure out how to scale up agile and address some of their more complex problems. The other issue revolves around how to replicate myself. I have been doing a fair bit of enablement and mentoring so that I have a group of agile adoption experts who are able to further my work. I’m probably spending a good 15 to 20% of my time directly mentoring these kinds of people.

What kind of attributes are you looking for in the people that you want to mentor and, more generally, what kind of attributes do you think it takes for a person to successfully help drive the adoption of agile?

The standard customer-facing attributes are a must, as is flexibility, and of course the person should be reasonably senior, with a minimum of 10 years of experience. I will work with people fresh out of school, just not with the intention of putting them into senior adoption roles.

You have worked on some awfully big projects in the past and written some very well-received books, for example on the agile modeling method, along with your participation in the enterprise unified process. What’s the next big project that’s going to come out from Scott Ambler?

Right now it’s finishing the agile scaling material. I’ve been working on a series of white papers on scaling agile. There are four papers in total. The first one was the agile scaling model, which came out in December. The second one, which came out in January, is an executive paper covering the details of the remaining material, even though those papers haven’t come out yet. The third white paper applies the agile scaling model to show how to scale several common agile practices, for example how you scale daily standup meetings on larger projects.

Can you go into more detail about the agile scaling model?

The agile scaling model addresses various issues that you will run into when trying to apply agile practices at scale. There are two primary ways to apply the model. The first way is to take a practice, for example daily standups, and then apply one or more scaling factors, which will change the way you use the practice. As an example, the way you want to manage daily standups will be very different if you have a large, geographically distributed team than if you have a small co-located one. Of course not all factors affect all practices.

The agile process maturity model was about ... "how do you take a mature approach to agile in a disciplined way" ...phenomenal push back... the agile community literally went rabid, ... of course developers aren’t always good at seeing the big picture... maybe there are reasons organizational leadership is selecting these metrics to measure..., such as keeping people in the company out of jail

Would you also agree that some of the practices don’t even apply without the presence of a scaling factor?

Yes, some practices start to kick in when a scaling factor becomes apparent. So for example if you’re in a regulatory environment, you will be doing a lot of different things that you wouldn’t even consider in the non-regulatory environment. You’ll be doing more documentation, and formal reviews will start making more sense.

What was your primary motivation for creating the agile scaling model? When did you stop and say we need this to be able to take agile to the next level? You seem to have a bit of a unique brand compared with some of the other agile "gurus" out there; you have chosen to deal directly with some of the "nonsexy" agile issues. When did you start down this path?

That’s actually a hard question. It started several years ago, working with customers, where I started to see several common patterns, situations where mainstream agile was actually breaking down. For example, there are many situations where small, co-located teams just don’t work. What we were seeing were these common factors which were causing us to modify the way we were doing agile in a very repeatable way. I kept getting asked "in this situation what do you do?", and of course it wasn’t just me. We were also being pushed by a lot of our customers to look at agile and CMMI together. So we started talking about a maturity model for agile. In fact a lot of the work in the agile scaling model has its roots in our earlier work on what we call the agile process maturity model. The agile process maturity model was about the question "how do you take a mature approach to agile in a disciplined way". What was interesting is that we got phenomenal pushback from the agile community. They literally went rabid; you mention the word maturity and a large part of the agile community goes nuts.

Why do you think the agile community has such a bias for the most part against maturity models?

Well, I think there are a lot of questionable implementations of CMMI. The big challenge with CMMI is that it is really attractive to bureaucrats, so what you end up with is some very document-heavy processes, because that’s what these kinds of people believe in. Of course this isn’t always the case, but it’s often true in general. And a lot of developers, agile or otherwise, have had some pretty bad experiences and have not appreciated it. Of course developers aren’t always good at seeing the big picture. Maybe there’s a reason that the organizational leadership is selecting these metrics and trying to measure efficiency, maybe there are some reasons why the integration control processes are actually more complicated than simply dropping some code into a source control system, and maybe there are some reasons why people outside of the development team actually need to do reviews, such as keeping people in the company out of jail. A favorite example of mine is time management reporting. Everybody whines about doing this, and rightly so; I don’t like doing it myself. But the reality is, and this is a hard observation for developers, that the most valuable thing they might do during the week is actually entering their time, and that just blows their mind. A lot of development can be written off through research and development tax credits, so properly entering time against different activities can result in significant savings for the organization.

At the same time, bureaucrats can get out of control and mess things up.

... but nothing really in it (CMMI) that says you have to be documentation heavy, and bureaucratic... if you let bureaucrats define your process, you will have a bureaucratic process. If you have pragmatic people define the processes you’ll have something pragmatic.

What are some of the alternate ways you could use CMMI-like approaches so that you can balance the things you need to do, to stay out of jail for instance, with the desire to be agile?

The first thing you need to know about CMMI is that there’s nothing really in it that says you have to be documentation heavy and bureaucratic. A few of the goals might motivate you towards that, but really it’s a choice in terms of how you implement your CMMI process. You can actually be very agile and very pragmatic. What I like to tell people is that if you let the bureaucrats define your process, you will have a very bureaucratic process. If you have pragmatic people define your process, then you end up with something that’s pragmatic. So developers need to stop complaining about how tough they’ve got it, and they need to step up and participate in defining these processes. If they don’t step up, the bureaucrats will.

So what you’re saying is that practitioners need to accept that the need for process compliance will always exist, and that it’s better to ensure that these processes and the compliance methods are acceptable to practitioners?

Exactly. Many companies are only used by their clients because they have these CMMI maturity levels, and many developers wouldn’t be working for their employers if these companies weren’t CMMI certified. Hopefully these developers would have jobs at different places, but maybe they wouldn’t. So developers need to appreciate these bigger issues sometimes. And again, CMMI implementers need to do a lot better at streamlining things and making them more pragmatic.

What would your recommended steps be for a CMMI compliant level 4 or 5 organization that is trying to become more agile?

My favorite first step is almost always to recommend that the organization start trying to work in short iterations, with the goal of trying to create potentially shippable software. That sort of brings reality to the conversation. An interesting observation is that pretty much every CMMI level 4 or 5 organization I’ve been to is trying to become more agile. They’ve actually got metrics that tell them where they are effective and where they are not.

One of the biggest challenges for services firms is when their customers are maturing to level 2 or level 3 and are not agile themselves, so they motivate their service providers not to be agile.

... so-called precision, and "accurate estimates"... is false.... it (accurate estimates) comes at a pretty huge cost. Which the customer is oblivious to... (estimates) creates a very dysfunctional behavior on behalf of the IT department, …I always ask them what they’re smoking.... (management and business should) start actively managing the project.

So service firms trying to get customers to give up "long-term planning precision" in exchange for the usage of agile approaches is a real challenge?

I think what customers need to realize is that the so-called precision and "accurate estimate" that they are getting is false. They aren’t getting that at all, and when they do get it, it comes at a pretty huge cost, which the customer is oblivious to because the service provider is a black box to them. And this is true even if the customer is not using a service provider; an IT department is pretty much a black box to most of its business customers. They have no idea what the actual cost of this demand for an accurate, or supposedly accurate, estimate is on the project. This need for a completely fixed upfront estimate creates some very dysfunctional behavior on behalf of the IT department. Getting an accurate estimate is actually a very costly thing to do, and nobody is very good at it. That’s one of the great myths of the IT world; the fact is that we are still not very good at estimates. Most of the time our stakeholders aren’t even very good at telling us what they want, so how can we possibly give them an accurate estimate? And even if they could tell us what they want, they are most likely going to change their minds anyway. So what you want to do is move away from this false sense of security and start actively managing the project. Instead of putting all this effort into getting an accurate estimate upfront, actively manage the ongoing costs of the project, keep an eye on things, and be actively involved. That’s critical.

So what would your response be to people who come up with complex estimation models like COCOMO II and function point analysis, and other approaches that try to put some science into estimating?

I always ask them what they’re smoking. Even the estimating community is pretty transparent about the fact that they’re not very good at estimating. The best estimates are done by people who know what they’re talking about and are more than likely going to actually be involved in the project. Using them to come up with a ballpark is faster, cheaper, and in the long term going to be more effective than using function points or whatever kinds of points you want. The challenge is that we need to step back from this wishful thinking. What I typically ask people is "how well has this approach worked for you in the past?" Sometimes people will lie to me, but most of the time it becomes very obvious that this just doesn’t work. Sometimes the only way to get these accurate estimates is for service providers to basically lie; they pad the estimates and cost. If you’re going to pad the budget by 30 to 40%, you’re saying your "scientific" estimate is anything but.

... you can come up with a fairly good guess (for estimates) fairly quickly... …just as accurate as function point counting... you can always refine... check to see if you’re on track... if not make changes you need or shut down the project.

So how do you reconcile this reality of estimation with the need for the business to have an understanding of cost? The business will have some concept of value; it may be intangible, but in some cases it is reasonably well known, and they want to contrast this value with what delivery will cost them as a business. How can you communicate to the business that they can’t get an exact cost?

Well, I will walk them through what’s happened in the past, because the business is smart; they know that these estimates aren’t any good. They keep hoping for the best, so I try to get them to see reality. That’s not to say there isn’t some benefit to getting an initial cost within plus or minus 30%, but we can do that pretty quickly. We don’t need to create complex models and function points; that’s a phenomenally expensive thing to do just to get an initial estimate, and it doesn’t give us very good accuracy. If you can get a few smart people into a room and talk through what the scope is, what your architecture approach is going to be, and what the requirements are, then you can come up with a fairly good guess fairly quickly, and that’s going to be just as accurate as function point counting is going to be. You can always refine your estimate as you go. For instance, if you are 3 months into an 18-month project and you have actually done some development work, you can check to see if you’re on track and if the team is producing the value it’s supposed to be producing, and if not you can make the changes you need or shut down the project.
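The arithmetic behind that kind of checkpoint can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function names, the linear extrapolation of spend against value delivered, and the sample figures are all hypothetical and do not come from the interview; only the plus or minus 30% tolerance is taken from the answer above.

```python
def ballpark_range(estimate: float, tolerance: float = 0.30) -> tuple[float, float]:
    """Return the (low, high) band around a quick ballpark estimate."""
    return estimate * (1 - tolerance), estimate * (1 + tolerance)


def projected_total(spent_so_far: float, fraction_delivered: float) -> float:
    """Extrapolate total cost, assuming (hypothetically) that cost scales
    linearly with the fraction of planned value delivered so far."""
    if not 0 < fraction_delivered <= 1:
        raise ValueError("fraction_delivered must be in (0, 1]")
    return spent_so_far / fraction_delivered


def checkpoint(estimate: float, spent_so_far: float,
               fraction_delivered: float, tolerance: float = 0.30) -> str:
    """Compare the projected total against the top of the ballpark band."""
    _, high = ballpark_range(estimate, tolerance)
    projection = projected_total(spent_so_far, fraction_delivered)
    return "continue" if projection <= high else "change course or cancel"
```

With a hypothetical ballpark of 1,800,000, a team that has spent 400,000 and delivered one eighth of the planned value projects to 3,200,000, which is above the top of the band (2,340,000), so `checkpoint(1_800_000, 400_000, 0.125)` flags the project for a change of course or cancellation.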

So what you’re saying is essentially set up a framework where you fail fast and fail cheap?

Exactly. That way there is an opportunity to spawn a couple of projects that might be worthwhile; you can continue with the things that are successful and shut down the things that aren’t. I ran a survey for Dr. Dobb’s, part of which was a project success survey where I asked participants how many of them typically canceled projects that were failing, and only 40% said that they actually cancel projects that are in serious trouble. That is obviously problematic. If a large number of projects fail, then you should be trying to cancel them as soon as possible. Effective project management should be focused on getting projects out of trouble or killing them right away, but a lot of organizations have this dysfunctional behavior where they are not allowed to do that.

Go to conferences, user groups and talk to other people,... others are having the same problems that you do. Nobody is pulling this crap off.

So it’s interesting that at one end of the spectrum there are companies like Google, Yahoo and even Wal-Mart that are fail fast, fail cheap, experimental; they do a lot of different things. At the other end you have traditional enterprise organizations that do not. How do you move some of these companies in the other direction, where it is actually okay to fail?

That’s a problem because it’s a huge cultural challenge; culture is one of the scaling factors in the agile scaling model. You need to make people see how things are working or aren’t; however, you can take a horse to water but you can’t make him drink. I’m basically an evidence-based guy, so I try to get them to observe that the current approach is not working out very well for them. A lot of people know inherently that the traditional approach isn’t working out very well for them, but they don’t want to admit it or they don’t know that there are better options. Then I start sharing evidence that it’s not really working out for anybody else either. One thing I tell people is to go to conferences, go to user groups, and talk to other people so that you have a better understanding of their experiences in the industry, and you will find out that others are having the same problems that you do. Nobody is pulling this crap off. It’s really pretty shocking when you talk to other people and find out that everybody is frustrated for the same reasons and nobody has it right. So let’s start doing the things we know work, and start abandoning the things that aren’t working for anybody.

Do you think today’s economic conditions have done anything to help spur interest in agile, forcing IT organizations to try to be more effective at delivering software for less?

I would actually say that it’s neutral. The problem is that becoming more agile or more lean actually requires investment from these organizations, i.e. you have to spend money to save money. These organizations are tight for money, so they want to improve things, but to improve things you actually need to spend. Some organizations are figuring that out, but some are waiting until they have some budget.

(I am most proud of) my work on agile modeling,... a tough topic before its time,... simple solutions like writing stories on index cards are nice,... people are doing a lot more modeling than that. …agile modeling appears to be more popular than TDD... 80% of agile teams use some form of upfront modeling,... it’s not part of what the agile community is "allowed" to talk about... there’s some significant dysfunction in the way that the agile community communicates...

Of all the publications, books, and projects that you’ve worked on, what strikes you as being the one thing that you are most proud of?

I would say my work on agile modeling. I took a tough topic that was probably before its time, and what’s interesting is that in the last couple of years I have been seeing more and more references to the work, and more and more people starting to get it. I get a phenomenal number of hits on that site. As more and more people start to scale agile and move out of the small co-located situations, modeling becomes more important. Simple solutions like writing stories on index cards are nice, but when you actually step back and observe what people are doing, they are doing a heck of a lot more modeling than that. In the surveys that I run, agile modeling appears to be more popular than TDD even within the TDD community, which I thought was strange. Forrester ran a survey a while ago and agile modeling was rated as being more popular than extreme programming. To be honest I question the way they worded the survey, but I would not have predicted anything like that at all.

Personally I’m a big fan of the agile modeling method stuff from years ago. Do you think it’s because AMM is really about a set of processes that are pragmatic and make sense, so people are just doing it without even realizing it’s AMM, unlike some of the other more strongly branded practices like scrum or XP?

Yes, branding is a good word, because practices like XP and scrum, and particularly scrum, have become a brand. But modeling is just not considered sexy by the developer crowd, so something like XP, which has almost no modeling, took off with very little marketing. It became popular really quickly because it sounded cool and it really appealed to developers.

So developers like it (XP) for the same reason that the business hated it? Visions of programmers on snowboards?

Exactly. But seriously, the challenge with XP is that it requires so much discipline to pull off that even though everybody wanted to do it, very few people were actually able to pull it off. Agile modeling didn’t have any marketing, we didn’t put a bunch of certifications around it, and there is no scam around it. And it was competing against simpler messages like user stories, and while user stories are great, they are not enough to get the job done. But that’s just not what people want to hear. So one thing I do is run surveys to find out what people are actually doing in practice, even though they may not be talking about it, and I’ve been criticized for that. But these surveys tell me 85% of agile teams do some form of upfront requirements and upfront modeling. It’s rare to hear agile teams talk about this; it’s not sexy, not part of what the agile community is "allowed" to talk about, and not part of the agile culture. But I think there’s some significant dysfunction in the way the agile community communicates. There are these taboo subjects, things that agile people aren’t supposed to talk about.

(The agile community) over focus on hard-core developer stuff, ...they don’t talk about the not so sexy stuff, something about this engineering mindset that wants black-and-white answers. Most of the world doesn’t work that way... they are fundamentally not getting the job done.

It’s my opinion that there’s a fair amount of rhetoric that comes out of the agile community that’s almost self-defeating. How would you characterize this? What are some of the big limitations of the agile community?

There is an over-focus on hard-core developer stuff, which is good; you should take pride in your work. Agile provides some good process for developers to rally around, but there’s this huge focus on development, and they don’t talk about the not-so-sexy stuff, like documentation, like requirements analysis, like testing, even though they are usually doing it. Developers need to get away from some of the agile marketing. I don’t know what it is about developers that they seem to fall for this blatant marketing stuff.

So they are prone to Puritanism?

Yes, there’s something about this engineering mindset that wants black-and-white answers. Most of the world doesn’t work that way. Software is mostly an art, not a science; some of it is science, but very little of it is. Developers tend to overly focus on technical problems like what the next version of the JDK is going to be, which is interesting, but not crucial. In a couple of months another version will come out with the facts in question. So developers will spend an awful lot of time learning the chassis of the technology, but not spend time learning more business-oriented skills, such as how to develop a good user interface, which is what their actual business users want. So often they are fundamentally not getting the job done because they’re focusing on technology over what is needed.

... there’s a lot of good stuff in scrum ,..but it’s very limited.... it’s overly simplistic... this concept that you can take a two-day course and become a scrum certified master, people fall for this marketing stuff. It completely cheapens it. (The agile brand)

You raised an interesting point about some agile practices employing "blatant marketing". I take it you’re talking about scrum? You’ve been a vocal critic of some of the practices of scrum. Where do you think scrum has gone wrong? What are some of the things they are doing that you feel are harmful to the community?

Well, first of all there’s a lot of good stuff in scrum. The ideas are great, but it’s very limited. The focus of scrum is project leadership, requirements management, and some stakeholder interaction for the most part, which is important stuff. But it’s overly simplistic, which is okay when you’re in simple, straightforward situations where simple approaches work. Also, the scrum certification approach has helped to popularize agile, and that’s a good thing. But this concept that you can take a two-day course and become a Scrum Certified Master, I mean come on. But people fall for this marketing stuff.

Do you think this certification marketing cheapens the agile brand?

It completely cheapens it. A friend of mine is a respected member of the agile community, and he’s got his doctorate. On his business card he’s got two designations, his PhD and his CSM, one right above the other, as if these things are even remotely equal to each other. As if spending five years doing his PhD is equal to spending two days in the classroom trying to stay awake. And he does this because his customers want people with a CSM designation, those customers not realizing that this is just a two-day course. I mean, so what. Also the "scrum but" phenomenon is utter nonsense. About a year ago I was on a panel with a scrum expert, so basically need, the "radical" and a bunch of other experts...

the typical scrum but rant,... you had to do everything (in scrum). Instead of listening to the marketing rhetoric... ask them (the audience) what actually works in the real world. ...scrum but is basically marketing ,...in the hopes that you’ll take the (scrum) course and become a Scrum Certified Master.

The "unnamed" radical?

Yes, exactly, and somebody in the audience asked if it was possible to adopt some of the practices of scrum but not all of them. And the scrum leader jumped in and said that that was a bad idea, the typical scrum but rant, saying that you had to do everything. So I was shaking my head, and when I got a chance to speak I basically said okay, fine, instead of listening to the marketing rhetoric let’s go to the audience and ask them what actually works in the real world. Does anybody in the audience actually benefit from having short iterations? What about a leader who focuses on mentoring and coaching and not just traditional project management? What about standup meetings? In every instance hands went up. So it is possible to benefit from adopting just a couple of these practices. Sure, there is some synergy in adopting more, but you can still get benefit from adopting these things on a one-off basis. So the scrum but stuff is basically marketing, to try to get you to adopt all of the practices, in the hopes that you’ll take the course and become a Scrum Certified Master. So what you’re seeing here is basically marketing taken to the extreme.

So on one hand scrum has been criticized as being too simple, and on the other hand RUP has been criticized as being too complex. Do you figure there’s a middle ground between what scrum is doing and what RUP is doing?

Yes, the challenge with scrum is that you have to figure out what to tailor in, and the challenge with RUP is that you have to figure out what to tailor out. Either approach puts a lot of onus on the person using the framework. But there is a middle ground, which is something that the OpenUP community tried to create. They’ve done a really good job; of course there is some bias against them from the agile community because of the bias against RUP.

So would you recommend something like OpenUP for customers that are trying to get to a disciplined agile approach?

Yes, it’s not perfect, but it’s got a lot of good things in it. What I’ve found is that many times I’ll go to clients who are trying to do agile and have had to invent many of the things that are in OpenUP. And they spend an awful lot of money doing it.

One of the charges against the agile approach is that a lot of the tooling doesn’t actually work easily with mainstream vendor supplied solutions. For instance it’s challenging to do test driven development using an end-to-end SOA suite provided by TIBCO, and it’s next to impossible (at least out of the box) to do this type of development for solutions using packages like SAP or Siebel. Is this a real challenge, and do you think it’s being addressed?

This is a big challenge for the agile community, a lot of tools are open source, and very good at doing what they are supposed to do. However it’s one thing to build a tool in isolation for a specific purpose, it’s much harder to build an integrated toolset that covers an end-to-end lifecycle and a number of different situations.

For instance, the Jazz platform by IBM has been in development for several years by some very smart people, and it’s an integrated toolset that covers the entire application lifecycle.

... some people would say that you need to do a fair amount of upfront requirements (for package/cots)... … that’s a bit of a myth, typically there aren’t that many options to choose from... … you can get to an answer fairly quickly. ...package (people) needs to be encouraged (should you agile)... ... this is a cultural thing

Would you recommend that people doing ERP-like development, such as PeopleSoft or Siebel, try some of the agile approaches even though the tooling might not be there currently?

Yes, I wrote a column for Dr. Dobb’s about this about a year ago. Some people would say that you do need to do a fair amount of upfront requirements so that you can choose the right package, but that’s a bit of a myth. Typically there aren’t that many options to choose from in a particular problem space, and you can get to an answer fairly quickly. It’s also very possible to release package-based changes using an iterative approach. I think the package community needs to be encouraged to try out some of these techniques, as they would certainly benefit.

So again, it is really a cultural thing that so many package implementations don’t use agile?

Yes, this is a cultural thing. Again, one of the things I said in my article is that the package community could learn a lot from agile. For instance, when somebody is buying a package from a vendor, one of the first things I would ask is whether there is an automated regression test suite. If it doesn’t exist, I would ask why not.

Certainly most packages don’t have this kind of functionality?

Exactly, and a lot of package implementations run into trouble, and not just because they don’t have test suites, but it’s certainly not helping. We need to raise the bar on our vendors and ask them: if they don’t have a test suite, how can they really be sure that all of their functionality works? And how, as a client, am I going to test this when I need to integrate it with my stuff?

Can you think of some pragmatic ways that customers of package software can start moving towards this approach?

Well, certainly customers of COTS solutions should be sure to put automated tests around extensions or customizations they implement on top of the package. But the need for an automated test harness should be in the actual package RFP. This should be a fundamental requirement of any package.
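As a rough illustration of what "automated tests around customizations" might look like, here is a minimal Python sketch. It is not from the interview: `apply_volume_discount` and its 5% rule are hypothetical stand-ins for a pricing customization layered on top of a package, and the tests use only the standard `unittest` module.

```python
import unittest


def apply_volume_discount(order_total, quantity):
    """Hypothetical customization layered on top of a package's pricing.

    Orders of 100 units or more get 5% off; everything else is unchanged.
    """
    if order_total < 0 or quantity < 0:
        raise ValueError("order_total and quantity must be non-negative")
    if quantity >= 100:
        return round(order_total * 0.95, 2)
    return order_total


class VolumeDiscountRegressionTest(unittest.TestCase):
    """Pins down the customization's behavior so a package upgrade
    that silently changes it is caught by the test run."""

    def test_small_orders_are_unchanged(self):
        self.assertEqual(apply_volume_discount(200.0, 10), 200.0)

    def test_large_orders_get_five_percent_off(self):
        self.assertEqual(apply_volume_discount(1000.0, 100), 950.0)

    def test_negative_input_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_volume_discount(-1.0, 10)
```

Saved as a module, a suite like this could be run with `python -m unittest` after every package upgrade or configuration change, which is the kind of safety net the answer above asks vendors to supply for the package itself.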

...certification scams are an embarrassment, ... (the agile community) have a tendency to reinvent the wheel, ... agile doesn’t typically talk about the end-to-end lifecycle.... these activities need to be talked about in a more mature fashion than they typically are... ... idea that most projects can start after two weeks just isn’t realistic.

What do you think are some of the biggest failings of the agile community? The agile community has done a lot of great things but where have they really missed the mark?

Again, the certification scams are an embarrassment, and they need to stop. We also have a tendency to reinvent the wheel. It’s interesting to watch how the agile community will come across some new unique technique, but then when you look you will see that this has been in RUP or even CMMI for years. And these techniques were in other things before that.

Could you give an example of something where the agile community invented something that was not really new?

A couple of years back the XP community came across the idea of building a "steelframe", basically a working reference or skeleton of a system. This is an idea that’s known as the elaboration phase and has been in RUP for years; it’s really nothing new. The RUP has always had the notion that you need to focus on validating your architecture before you go into construction. It’s still working software, and still has value, but you’re just reordering your work to make sure that the architecture is valid.

Another failing is that agile doesn’t typically talk about the end-to-end lifecycle. I was at a conference where a Scrum member of the panel was saying that on their project they were coding from week one. Then somebody from the audience who was actually on the project stood up and called bullshit; apparently there had been six months of prototyping and requirements work done before Scrum had even started. These activities need to be talked about in a more mature fashion than they typically are by the agile community. This idea that most projects can start after two weeks just isn’t realistic. When I did my survey I found out that many agile projects took several months or more to get going. The initial stack of user stories needs to come from somewhere, someone has to decide how to fund the project or whether the project is worth funding, and even if it’s just a small co-located project, somebody has to find the room where everybody’s going to sit. These things all take time.

...lean explains why agile works. It also provides a philosophical foundation for scaling agile...

Lean has now come out as the new popular buzzword in the agile community, and it has a much more end-to-end lifecycle connotation. Do you think the focus on lean is a good thing? Or is this just another buzzword?

I actually think there’s a lot of good stuff in lean; in many ways lean explains why agile works. Things like deferring commitment and eliminating waste, those principles really hammer home why agile works. It also provides a philosophical foundation for scaling agile. The principles are really good. Frequently when I come across organizations that are doing a good job of scaling agile, they have a good mixture of organizational principles, some of which come from lean and some of which are their own, but the idea that they’ve got some grounding principles remains constant.

Would you say that any process framework needs to be grounded in these principles first?

Absolutely. Take XP, they have foundational value statements. Agile modeling has the same thing, as does RUP with its six principles. The processes can never tell you in detail what to do, and even if they did it doesn’t matter, nobody is going to follow it anyway. People are smarter than that. When you run into something that the process doesn’t cover you should always be able to fall back on the principles, and this is what lean really brings to the table: a set of principles that really make a lot of sense.

So lean also brings a complete view, not just one focused on developers?

Yes it brings a complete picture, all the work necessary to create a product, not just development.

...a lot of inertia in these (QA) communities, inertia with the (QA tooling) vendor, ...the QA group has been so underfunded and downtrodden, that they haven’t had a real chance to come up for air. ... some members of the agile community might not have the most welcoming attitude to people from the QA group.... should be getting these QA people into their teams for their testing skills, ...agile rhetoric can get in the way...

Who are the biggest resisters to agile adoption? What are the kinds of people that typically block agile?

Quite often it’s the typical 9-to-5ers, but in a lot of places these kinds of people don’t exist anymore, at least in IT. Sometimes when working with clients we’ll come across people in the project management group or the database administration group who are in complete denial, especially the database group. There isn’t a lot of leadership there, especially in the process area; in many ways this group is stuck in a rut from the 70s. In some other organizations it’s the quality assurance group, who still insist on these big detailed specs extremely early on in the project.

You raise an interesting point. The QA group seems to be on a really different track than the agile one. If you go on forums or user groups, it’s very much about testing separately, testing in the large using big vendor tooling, with not a lot of talk about integrated test-driven development approaches. Do you have any insight as to why that is?

Well, there is always going to be some need for external testing, such as penetration testing. But there is a lot of inertia in these communities: inertia with the vendors, and inertia with the people themselves. A lot of it has to do with the fact that the QA group has been so underfunded and downtrodden that they haven’t had a real chance to come up for air.

So would you attribute this lack of "fresh" thinking to a general lack of funding and a lack of focus on quality in general?

That’s a big part of it. Also, some members of the agile community might not have the most welcoming attitude to people from the QA group. This whole idea that because developers do TDD they don’t need testers is a good example. Agile developers should be getting these QA people into their teams for their testing skills, so some of the agile rhetoric can get in the way sometimes.

...most IT governance is dysfunctional. Good governance is about motivation and enablement.... you don’t tell knowledge workers what to do, you motivate them, and make it as easy as possible for them to do the right thing. ...many (developers) have had to play the role of governance blocker, ...all these instances of people pretending to follow governance and they’re really not, ... (developers) successfully blocking them, who else is also so successfully blocking? How many people are basically pulling the wool over their (governance) eyes?

... developers need to be educated that this (governance) is about getting the job done, using the most pragmatic way possible...... client had a multi-month architecture review process,... if I was the boss I would fire them, instantly...…governing developers is like herding cats, which is actually phenomenally easy, ...wave a fish in front of the cats, suddenly they’re really interested…

Agile governance has been discussed as an alternative to the typical command-and-control approach. What’s your elevator pitch for those who aren’t in the know about agile governance?

First of all, most IT governance is dysfunctional. For all their talk on measurements and metrics, these governance groups never have very good numbers to show their own success rates. This is usually the first sign that something is going very seriously wrong. Good governance is about motivation and enablement. The first thing that governance needs to realize is that IT workers are really knowledge workers, and you don’t tell knowledge workers what to do; you have to motivate them, and make it as easy as possible for them to do the right thing. The problem with the command-and-control approach is that it adds another layer of bureaucracy, and makes it harder to get things done. So what happens is that knowledge workers will do just the minimum work necessary to conform to whatever crazy governing strategy is currently in place. That’s just not the way to do it. If you want your developers, for example, to follow some particular coding conventions, formal code reviews are just going to foster alienation. Give them the tools to validate their coding approaches in real time, and work with the developers to help them develop a coding convention in the first place. Promote things like collective code ownership and pair programming; those two things alone will do more to promote good code than code reviews will.
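To make "tools to validate their coding approaches in real time" a little more concrete, here is a minimal Python sketch of an automated convention check. It is an illustration only, not a tool mentioned in the interview: the snake_case rule, the `find_naming_violations` helper, and the sample source are all assumptions, and only the standard `ast` and `re` modules are used.

```python
import ast
import re

# Assumed team convention for this sketch: function names are snake_case.
SNAKE_CASE = re.compile(r"^_*[a-z][a-z0-9_]*$")


def find_naming_violations(source):
    """Return sorted (line, name) pairs for functions that are not snake_case."""
    tree = ast.parse(source)
    violations = []
    for node in ast.walk(tree):
        if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
            if not SNAKE_CASE.match(node.name):
                violations.append((node.lineno, node.name))
    return sorted(violations)


# Hypothetical source text a developer might be about to commit.
sample = "def computeTotal(x):\n    return x\n\ndef compute_tax(x):\n    return x\n"

for line, name in find_naming_violations(sample):
    print(f"line {line}: function '{name}' should be snake_case")
```

A check like this could run in an editor or a pre-commit step, so the developer gets feedback immediately instead of in a formal review weeks later. The convention it enforces would still need to be one the team agreed on together.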

I have been to a number of conferences where I asked the audience how many have had to play the role of governance blocker, meaning that they were responsible for churning out documentation just to make external people happy and give the appearance that they were complying with some crazy governance framework. In every case a large number of people put up their hands. Then I asked "how many of you people actually got caught", and everybody put their hands down. So there are all these instances of people pretending to follow governance when they’re really not. On one project I was working on for a client, the customer asked what was working and what wasn’t. This project was phenomenally successful in the eyes of the client. But I had to be honest and let them know that out of 10 people in our project, we had 2 people who were dedicated just to churning out documentation. Documentation that was not used by any of us, and was built just to shut these people up. So that means we had a 20% overhead, overhead that could have been dedicated toward valuable things, like automating testing or refactoring the code so that it had better quality. Even worse, what it means is that there was a group of people, the bureaucrats, who were not only not adding value but were actually taking value away from the team. The client actually didn’t appreciate this, but sometimes I do appreciate dishonesty. I mean, we were successfully blocking them; who else was successfully blocking them in their organization? How many people are basically pulling the wool over their eyes?

...instead of having all these reviews and controls, a lot of these things can be automated… ...we have tools to help you manage and monitor your process improvement.... self-help checks and retrospectives... how well they are doing with process improvement

(these tools give) a very good handle with these groups on what the quality is, what the time-to-market is going to be, project status and stuff like that. Management has the information they need to tell if the team is in trouble right away or whether they’re doing well...

So imagine you’re a governance body that is trying to enforce some constraint, say that teams have to use "widget A", which may be more or less useful in different situations. The conventional governance approach would be to conduct a review of the solution to ensure that the different teams were using the widget. What would an alternate approach be?

The approach here is to tell teams that the default way is to use widget A; however, if a team wants to use an alternate approach, they should be able to if they have a good story. But instead of having the review in the first place, it’s probably better to have some principles in place that say that our developers are not in the business of building fancy widgets, they are in the business of being a bank, or an insurance company, or a retailer. Developers need to be educated that this is about getting the job done, using the most efficient way possible, but there still needs to be some room for creativity. And instead of having all these reviews and controls, a lot of these things can be automated. I was working for a client that had a multi-month architecture review process, regardless of the size of the project. Holy crap. I mean, how do these architects justify their existence? Because if I was their boss I would fire them, instantly. These people have to learn how to be pragmatic. The whole idea of lean governance is to streamline these things and look at behavior. People will tell you that governing developers is like herding cats. Well, actually herding cats is not hard if you understand what motivates them. In traditional command-and-control you’ll send the cats a couple of memos, maybe make them go through a couple of reviews, and the cats will ignore you. The cats are just going to sit wherever it’s warmest. But getting cats out of a room is actually phenomenally easy: if I wave a fish in front of the cats, suddenly they’re really interested in me. So all I have to do is throw the fish into the next room and all the cats will go after it. I then close the door behind them and I’ve achieved my goal.

So what do we do to motivate developers? Or QA, or anybody in IT?

Knowledge workers are motivated by pride of work. Allowing them to do good work and be recognized for doing good work is important. Most developers actually want to do the right thing, and most of them understand that they’re working for a company that needs to make money; they understand that there needs to be a margin on the software they produce. I suggest that taking the time to educate developers on the fact that coding conventions could save the company up to 10% on revenue will help motivate them to take part in making these coding conventions. Developers will understand this if you approach them in the right way. Likewise, if you’re in a regulatory environment, you need to do more documentation just to stay out of jail. And if you’re working on a mission-critical system, you need to do more testing, and the development cycle is going to be slower.

... developers need to realize that ...they are being governed, end of story. They need to understand the goals of governance, ...if developers can get to the root cause, then they can say "hey there are other ways to achieve these governance goals".

A lot of people think governance is something you do above and around everyday work. How do you enable an environment where governance is everybody’s job, and everyday practitioners are responsible for owning governance? How does that scale?

First of all, developers need to realize that even though governance is a dirty word in their community, they are being governed, end of story. Developers have let bureaucratic people define the governance approach, so they have ended up with a bureaucratic governance approach. The reason developers are so ticked off with governance is that they let the bureaucrats define the governance structure. They need to get involved directly themselves. They need to understand what the goals of governance are, what the principles are, and where the value is. Developers could then say, hey, there are a number of ways to achieve these goals that are pragmatic. For example,

Developers need to realize that they are going to be monitored, and they’re often in denial about this. You can have all the agile processes and daily standups that you want, but somebody, either you or your manager, is going to be asked to scrub this and present it at a status meeting. Of course this comes off as a phenomenal waste of time; it takes a lot of effort to produce the status reports. So instead of denying the need for these things, you can start using automated and integrated tooling to help you do your job. Again, Jazz and things like RTC can help you automate some of these governance requirements.

MCIF (allows) organizations to take a look at client software development processes and how to improve them.... we have tools to help you manage and monitor your process improvement.... some metrics should only be consumed internally by the team, and almost always the process improvement metrics are those ones. ... Rational Insight can allow you to record daily activities... (using) very accessible workbenches, ...you have some hard metrics that management can look at to track effectiveness, but you also have some softer improvement metrics that are only consumed internally by the team.

So you referred to some of these "next-generation" tools by Rational that can help scale agile. Can you go into more detail?

First of all there’s something called MCIF, which is something that helps the service organizations take a look at clients’ software development processes and how to improve them. It’s not specifically agile, but most of the core content is either agile or lean because that’s where a lot of the process improvement is coming from. There are two aspects to the toolkit. The first is the initial assessment, where service organizations can work with clients to figure out what they’re trying to achieve, what their objectives are, and what the challenges are. It’s your standard assessment where you end up with some really good thinking and really good advice.

The second part of the MCIF is execution: we have tools to help you manage and monitor your process improvement. We have a number of tools that not only help teams do self-help checks and retrospectives, but also actually allow them to track what they’re doing: how well they are doing with agile or other process adoption, and how effective it is. We want to give teams evidence to prove and measure that the process improvement is actually creating value. We have a tool called Rational Self Check that helps organizations do this. A strange observation that we (IBM) have made is that some metrics should only be consumed internally by the team, and almost always the process improvement metrics are those ones. What we have observed both within IBM and within our customers is that when you report the process improvement metrics up the food chain, all of a sudden the objective becomes to look good, rather than to become good. So if you don’t report software process improvement metrics, the objectives of the team stay on becoming good and actually improving.

So how does management get a good sense of how teams are doing if management doesn’t get to look at these metrics? Does management ever get to look at these metrics?

They shouldn’t be looking at the MCIF metrics; they should be looking at other metrics. On the Jazz platform, you can track and start reporting on a number of "software lifecycle" transactions using a number of IBM products that give you very accessible workbenches. As an example, Rational Insight can allow you to record the daily activities of IT workers who are producing software and create project workbenches that give visibility into what’s actually going on. This allows us to track things like burn down charts and build status, as well as various project and quality metrics. This approach allows us as an organization to be more efficient, and also gives management the metrics they need to effectively govern.

So essentially, you have some hard metrics that management can look at to track effectiveness, but you also have some software improvement metrics that are only consumed internally by the teams?

Yes, and you also have some very robust access controls, so that you can control exactly who is looking at what, but effectively how teams are trending on practices should remain private.

So if you’re never showing management the direct results of these software improvement initiatives, how do you justify these initiatives?

Management should be focusing on the goals, so if the goal is to become more productive, or to deliver fewer bugs, there should be specific metrics to measure that. Managers should have their own set of more business-oriented objectives, and that’s what Insight allows you to measure. These metrics are generated by the various products and rolled up into something consumable. We actually use this internally to manage our own software groups. We’ve got a very good handle with these groups on what the quality is, what the time-to-market is going to be, project status and stuff like that. Management has the information they need to tell if the team is in trouble right away or whether they’re doing well, and they won’t have to attend all the standup meetings directly. This automation is really important; my general rule of thumb is that I question any metric that is manually generated. Certainly there are some metrics that are hard to generate automatically; things like stakeholder satisfaction are hard to get. But for the most part, metrics can be automatically generated if your tools are sophisticated enough.

So in your lean governance paper you talk about how to set up an environment that constrains developers in a way that pushes them into doing the right thing.

Yes, it’s a combination of education and then motivating these people to do the right thing. And part of that is making it as easy as possible for them to do the right thing. A big part of this is automating as much of the governance process as possible.

How do you answer the charge that it is impossible to "do agile" on a particular type of project? Whether it’s package, integration, etc.?

Ultimately, when someone tells me that you can’t do something a certain way, what they’re really saying is they don’t know how to do it. Sure, there are some situations where agile doesn’t make a whole lot of sense, but they are actually very few and far between. The challenge is that if you fall for the mainstream agile rhetoric, then you really see a focus on co-located teams, and a lot of talk about the core agile practices. If the agile community wants to actually be applicable to more complex domains and more complex team structures, it needs to start addressing these issues with other practices, or a more complicated flavor of agile. If you listen to the agile rhetoric around "we don’t do modeling up front, and we don’t do documentation", the agile community has pretty much shot itself in the foot in terms of addressing even remotely interesting situations.

(Architects and managers should) help people solve problems, ...get involved and be somebody that the team actually wants to go to... Look at your job as one of basically serving the people doing work.

So what is some tactical advice that you could give a senior manager or a senior architect, one who has a whole pile of projects that he is responsible for and is trying to manage them effectively? How would you help them to become more relevant to the work that is actually going on? How does one do that and at the same time keep the high-level view necessary to doing his job?

Help people solve problems. Every team has a challenge and is always struggling with something, so engage that team, roll up your sleeves, and help that team solve whatever problem they are having. Those problems could be the need for more resources, better facilities, whiteboards, or even some relief from creating all this unnecessary documentation, etc. If you are an architect, start helping teams by pointing them at specific frameworks that already exist, existing examples, etc. Instead of just creating all these models and partaking in reviews, get involved and be somebody that the team actually wants to go to. Basically as a manager, even at the highest level, you need to look at your job as one of basically serving the people doing the work. Be more of a coach and a leader, be a resource, don’t try to manage them. Don’t try to be a cop. Actually help them, and make yourself useful.

Actually that’s a great closing line, thank you for your time here.

Thank you.