We are currently transforming a large organization of 200+ people to adopt new agile behaviors. The transformation began by identifying three pilot projects to be the first adopters of agile skills and techniques.

The three projects began their agile journey by creating a story map to organize and visualize their business requirements. The story mapping technique enabled the project teams to decompose their projects into features that can be managed independently. As a starting point, they transformed their existing business requirements document into a story map. The story map was then used as a visual tool to drive discussions with the business on feature creation and prioritization.

After

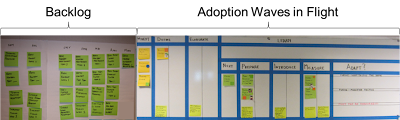

decomposing the project into features, the teams created their Kanban boards

with policies to manage the flow of work through the system. The teams’ set up

regular cadence for standup meetings to provide updates on tickets, raise and

resolve risks, issues, and blockers.

The new approach received positive feedback from the business because it engaged them in more frequent discussions. The business is now working closely with the project teams to become familiar with the new tools and processes. The teams have also set up regular meetings with the business to address both business and IT perspectives on features and on how those features will be grouped into minimum marketable features (MMFs). The new processes and Kanban have given the project teams and the business a collaborative and transparent way to manage their projects.

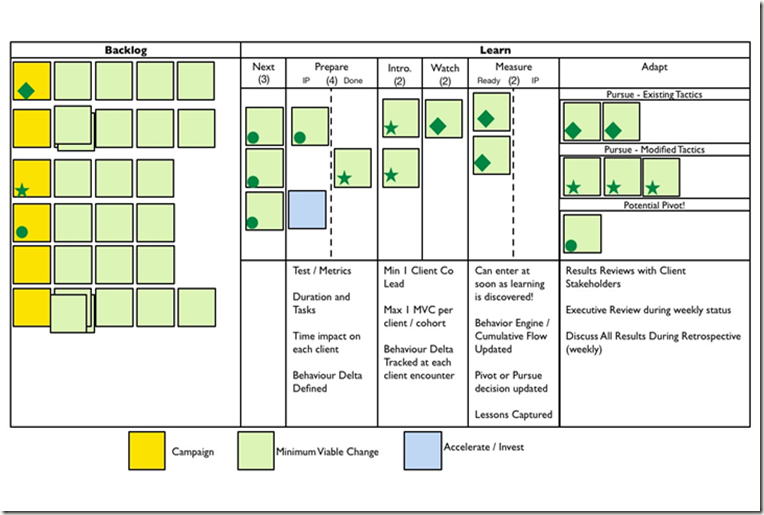

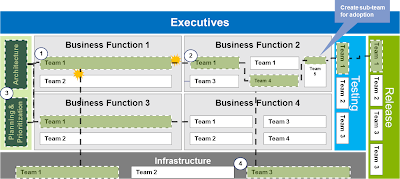

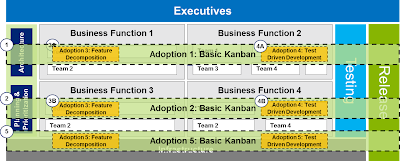

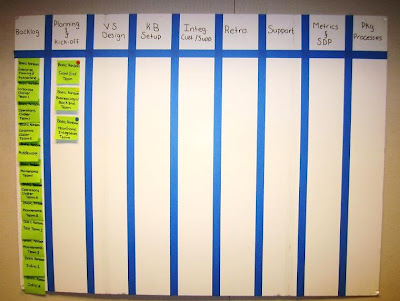

Below are

pictures from project Kanban boards and the team’s feedback on the new process

and Kanban.

Pilot Project 1

- “The story board has been instrumental in identifying gaps between the requirements and potential solution.”

- “Although our project is still very early in the Kanban process we have found definite value in the visual and collaborative aspects of the model.”

- “Having standups helps to ensure everyone on the team is familiar with the state of the project, what issues are currently open and also creates an opportunity for everyone to brainstorm and provide input when required.”

- “Main challenges that I have seen are firstly trying to learn and pilot the model in a medium/high complexity project (learning curve) as well as adjusting everyone's views to how the project would be approached compared to a standard waterfall model.”

- “Trying to retrofit a project that started as waterfall is challenging e.g. high level requirements doc (updated, revised) in progress when Kanban training started; developing project plan with business partner who had not yet been exposed to Kanban, and moving forward adapting to Kanban technique.”

- “Learning and application happening at the same time (don't feel there is sufficient time allowed in the project timeline to 'practice' techniques/adjust our processes before putting it in front of the client); making mistakes as part of our learning can affect our credibility, especially in a culture that traditionally is not failure/mistake-tolerant.”

Pilot Project 2

- “One could easily understand the work flow and identify the bottleneck to re-distribute the work load.”

- “It makes work more interesting and more transparent.”

- “It also helps me to find where is my position in this project and the relation between my tasks and others, and who will be affected if the task cannot be delivered on time.”

- “The most challenging part is defining meaningful tasks (fine-grained) or feature sets and reduce the coupling (dependency) between tasks, which is the key part to make the whole workflow goes smoothly.”

- “Switching to Kanban in mid project hasn't been easy. Some overhead was encountered getting everyone up to speed but project timelines weren't changed.”

Pilot Project 3

- “The daily standups promote communication between my peers.”

- “Helpful to identify road blocks and the opportunity to resolve more quickly.”

- “Using Kanban, we can trace the project activities, and find the bottleneck (blocker) in the different phases.”

- “Business stakeholders wanting to drive Kanban activities before they are fully understood. Often the business PM is not clear and causes confusion.”

- “Clarify the role and responsibilities of the Kanban Champion in relation to the Project, how they interact with business - this is not clear and is causing confusion.”

- “I feel that we have a challenge to get blocker removed quickly and efficiently, and define the delivery timeline/milestone for each MMF.”